In the previous post we covered the background and motivation for implementing adaptive video streaming.

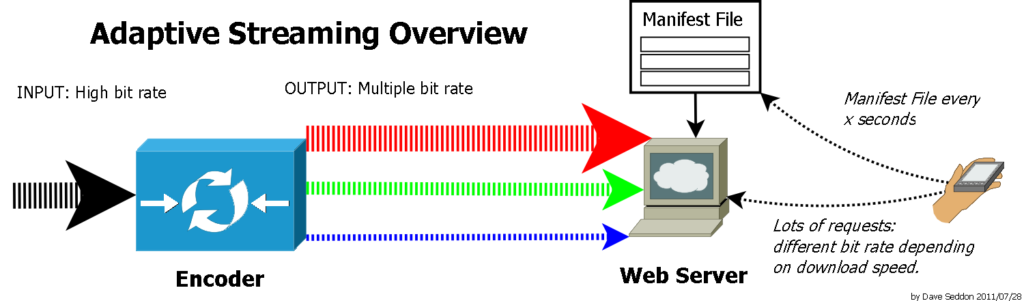

In short, it lets viewers with different internet bandwidths watch the video quality that suits them best. It also lets the video keep playing without stalling when the available network bandwidth changes.

Requirements

The main requirements for implementing adaptive streaming are as follows:

- Creating multiple qualities of the video. These qualities usually differ in resolution and encoding parameters, and are called renditions or representations. Sites that let the user manually select a video quality already met this requirement.

- Segmenting each of the qualities. You can think of segmentation as cutting the video into short pieces, each of which is called a segment. The segmentation should create points in time at which the video quality can safely be switched. Each segment should start with a key frame, which is a frame that is fully represented in the video stream rather than as incremental information based on the previous frame. If a segment does not start with a key frame, playback will be corrupted and rectangles of various colors will appear on screen. Each adaptive streaming protocol recommends a different segment length.

- Creating manifest files. Manifest files describe the available video qualities and their attributes, such as resolution, codec, and bandwidth. They also describe how the different qualities are segmented.

- Implementing or using an adaptive video player. An adaptive video player is a video player that implements logic for switching between the different video qualities. The player should measure the available network bandwidth and select the most suitable quality for the measured bandwidth. It can also take the display resolution or CPU usage into account when deciding which quality is best.
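To make the segmentation requirement a bit more concrete, here is a minimal sketch of how segment start times could be picked so that every segment begins on a key frame. It assumes the key frame timestamps are already known (the list below is made up for illustration), and the function name `segment_boundaries` is my own, not part of any protocol or tool.

```python
def segment_boundaries(keyframe_times, target_length):
    """Pick segment start times (in seconds) from key frame timestamps.

    A new segment starts at the first key frame that is at least
    `target_length` seconds after the start of the current segment,
    so every segment begins with a key frame.
    """
    if not keyframe_times:
        return []
    boundaries = [keyframe_times[0]]
    for t in keyframe_times[1:]:
        if t - boundaries[-1] >= target_length:
            boundaries.append(t)
    return boundaries


# Hypothetical key frame timestamps: one key frame every 2 seconds.
keyframes = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
print(segment_boundaries(keyframes, target_length=4.0))
```

If the key frames are not evenly spaced, the segments produced this way will have slightly different lengths, which is why the manifests need to describe how each quality is segmented.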

Available adaptive streaming protocols

All adaptive streaming protocols have many things in common. They all have multiple segmented video qualities, manifest files, and a player that supports the format. The main difference between the different protocols is their manifest format. Each adaptive protocol comes with different recommendations. I will mention some of the adaptive protocols I worked with, their manifest format and recommendations. The list is sorted by relevance, as I see it (most relevant format at the end):

- Adobe HTTP Dynamic Streaming

The first adaptive streaming protocol I encountered was Adobe HTTP Dynamic Streaming. It was back in 2011, when we were developing a P2P CDN system and one of our customers was using this protocol for online streaming on PC.

This protocol uses a “.f4m” XML manifest file, containing the available qualities and base64-encoded binary information that describes how the videos are segmented. It requires custom code or a plugin on the web server that serves the videos. The default (and probably recommended) segment length of this protocol is 4 seconds (the protocol’s own term for these pieces is “fragment”). This length actually seems to be optimal for adaptive streaming in general. The different fragments are addressed using a url template that contains the video filename and ends with a “SegX-FragY” pattern, where X is the segment index and Y is the fragment index. I never saw videos that use more than 1 “segment”, so the pattern was usually “Seg1-FragY”. So if you have a 30-minute video with 4-second fragments, you will end up with 450 fragments. Having 450 fragments means that you will have 450 urls, ending with “Seg1-Frag1”, “Seg1-Frag2”, “Seg1-Frag3”, …, “Seg1-Frag450”. I didn’t encounter this protocol on “smart devices”, like smart phones or smart TVs.

- Microsoft SmoothStreaming

Microsoft SmoothStreaming uses an XML file to describe the available qualities and how they are segmented. From what I’ve seen, all the qualities are fragmented at exactly the same locations, which allows a smooth transition, as you can switch to any of the qualities wherever a segment begins. The manifest describes the length of each segment using an XML element, with the ability to specify a repeat count for consecutive segments that have the same length. It can also specify a repeat count of “-1” to indicate that all the remaining segments have the same specified length. The recommended segment length of this protocol is 2 seconds (which I find to be too short). The segment urls are also pattern based, with a default pattern like “QualityLevels(<bitrate>)/Fragments(video=<segment time offset>)”, where “<bitrate>” is the bitrate of a specific quality and “<segment time offset>” is the time in the video at which the segment starts. The default timescale of SmoothStreaming manifests is 10,000,000 units per second. So if you have a 30-minute video with 2-second segments, you will end up with 900 segments. Having 900 segments means that you will have 900 urls, specifying a “segment time offset” of “0”, “20000000”, “40000000”, …, “17980000000”. This protocol is supported on smart TVs, and on custom video players for Android smart phones and TVs. The main support for this protocol on PCs was implemented using Silverlight, which no longer seems to be supported by the most popular web browsers, Chrome and Firefox.

- MPEG-DASH

MPEG-DASH is the latest member of the popular adaptive streaming protocols. It is still not the most popular protocol, mainly because Apple’s devices natively support HLS and no other protocol: if I had to select a single protocol for a mobile application, I would definitely choose HLS over MPEG-DASH, so that I could use one protocol for all devices. MPEG-DASH is the most complex protocol, as it can represent any kind of url and is not limited to url patterns. It uses an XML file format that specifies the available qualities and how each quality is segmented. This protocol doesn’t require the segments of all the qualities to be aligned to the same locations, so in some cases switching between qualities means discarding some data, when a segment of the new quality starts in the middle of a segment that was already downloaded. The format supports several url pattern types, which can be time offset based (like SmoothStreaming) or index based (like Adobe HTTP Dynamic Streaming). It also supports binary segment specification, where all the segments are stored in the same file and the list of segments and their locations in the file is also stored in the video file. With this approach the different segments are accessed by requesting different byte ranges of the file. The binary list of segments is referred to as the “index range”, because the XML manifest specifies the range in which the segment index is found. MPEG-DASH is currently used by YouTube, using the Index-Range approach (but without the actual XML manifest; it seems that the player gets the manifest information in some other way). MPEG-DASH can be used to represent the same metadata as any of the other protocols.

- Apple HLS (HTTP Live Streaming)

First of all, the HLS format is used not only for live content, but also for VOD content. It is natively supported by all of Apple’s devices (and I think that no other protocol can currently be used on Apple’s devices). The HLS protocol uses 2 layers of manifests (or playlists), in “m3u8” format. The first layer is the master playlist, which describes the available qualities and specifies where the playlist of each quality can be found. The second layer is the playlist of each quality. Each of these playlists contains a list of the urls of the video segments of that quality, and the length of each segment. This protocol doesn’t support patterns, so it requires actually listing all the segments of all the qualities. Apple recommends a segment length of 10 seconds, which is way too long in my opinion. 10-second segments don’t allow the player to respond fast enough to network changes: the player needs to download 10 seconds of video, and if the available bandwidth is too low, it can take much more than 10 seconds to download the segment, which might end in a stall.
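To compare the addressing schemes described in this list side by side, here is a rough sketch that generates the segment addresses of the same 30-minute video in three of the styles: index based (Adobe HDS), time offset based (SmoothStreaming), and explicitly listed (an HLS media playlist). The file name `video_720p`, the 2,500,000 bitrate, and the `.ts` segment names are assumptions made up for the example, and the helper functions are my own, not part of any SDK.

```python
def fragment_count(duration_s, fragment_s):
    """Number of fragments needed to cover the video (ceiling division)."""
    return -(-duration_s // fragment_s)


def hds_urls(name, duration_s, fragment_s=4, segment=1):
    """Index-based addressing: '<name>Seg<X>-Frag<Y>', with Y starting at 1."""
    count = fragment_count(duration_s, fragment_s)
    return [f"{name}Seg{segment}-Frag{y}" for y in range(1, count + 1)]


def smooth_urls(bitrate, duration_s, fragment_s=2, timescale=10_000_000):
    """Time-based addressing: the offset is the start time in timescale units."""
    count = fragment_count(duration_s, fragment_s)
    return [
        f"QualityLevels({bitrate})/Fragments(video={i * fragment_s * timescale})"
        for i in range(count)
    ]


def hls_media_playlist(name, duration_s, segment_s=10):
    """Explicit listing: every segment gets its own #EXTINF entry and url line."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_s}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for i in range(fragment_count(duration_s, segment_s)):
        # The last segment may be shorter than the others.
        length = min(segment_s, duration_s - i * segment_s)
        lines.append(f"#EXTINF:{length:.3f},")
        lines.append(f"{name}_{i}.ts")  # hypothetical segment file name
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines)


duration = 30 * 60  # the 30-minute video used in the text, in seconds

hds = hds_urls("video_720p", duration)
smooth = smooth_urls(2_500_000, duration)
playlist = hls_media_playlist("video_720p", duration)

print(len(hds), hds[0], hds[-1])
print(len(smooth), smooth[1], smooth[-1])
print(playlist.count("#EXTINF"))
```

Note that the last SmoothStreaming offset is the start time of the last segment (1798 seconds), not the end of the video. The HLS playlist, on the other hand, has to spell out every one of its 180 segments, and that is repeated for every quality.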

Conclusion

If I had to choose which protocol to use, I would go with MPEG-DASH, as it supports segment listing (like Apple HLS), segment templates/patterns (like Adobe HDS and Microsoft SmoothStreaming), and also in-file segment indexing. The downside of selecting MPEG-DASH is that you have to use a third-party player (one that is supported on both iOS and Android) or develop your own player, because most devices do not natively support MPEG-DASH. But bringing your own player is also a good thing, because it lets you control the player behavior and maintain a consistent user experience across all devices: you run the same player logic everywhere, instead of getting a different behavior from each native player implementation. I would use the Index-Range feature of MPEG-DASH, as it keeps all the information required for playing a certain quality in one file, or use one of the segment template formats. The upside of the segment template format is that it holds all the information in a compact way and in one manifest file, so you get the entire metadata of all the available qualities in one file request. I would use 5-second segments, as I find this length to be a good balance: on the one hand it is short enough to respond to network changes, and on the other hand it doesn’t result in high overhead caused by making too many requests.
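As an illustration of how compact the segment template approach is, here is a simplified sketch of expanding a number-based MPEG-DASH template into segment urls. The identifiers `$RepresentationID$`, `$Bandwidth$`, `$Number$` and `$Time$` come from the MPEG-DASH template syntax, but the template string, the representation id, and the helper functions are assumptions for the example, and the sketch deliberately ignores the `$$` escape and width formats such as `$Number%05d$`.

```python
def expand_template(template, representation_id, bandwidth, number=None, time=None):
    """Substitute the basic template identifiers (simplified, see the text above)."""
    values = {
        "RepresentationID": representation_id,
        "Bandwidth": bandwidth,
        "Number": number,
        "Time": time,
    }
    url = template
    for name, value in values.items():
        if value is not None:
            url = url.replace(f"${name}$", str(value))
    return url


def number_based_urls(template, representation_id, bandwidth,
                      duration_s, segment_s, start_number=1):
    """One url per segment, numbered from start_number."""
    count = -(-duration_s // segment_s)  # ceiling division
    return [
        expand_template(template, representation_id, bandwidth, number=n)
        for n in range(start_number, start_number + count)
    ]


# Hypothetical template and representation, for a 30-minute video.
template = "video_$RepresentationID$/seg_$Number$.m4s"
urls = number_based_urls(template, "720p", 2_500_000, duration_s=30 * 60, segment_s=5)

print(len(urls))
print(urls[0])
print(urls[-1])
```

With 5-second segments the 30-minute video needs 360 requests per quality, compared to 900 with 2-second segments and 180 with 10-second segments, and a single template line in the manifest is enough to describe all of them.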

Player implementation

The most important component of any adaptive video streaming protocol is the video player. A bad adaptive video player implementation will result in a bad viewing experience for the user, no matter which adaptive protocol is used. The most important job of an adaptive video player is to measure the available network bandwidth correctly and select the most suitable video quality for the measured bandwidth. A good adaptive video player will consider multiple variables besides the network bandwidth when choosing the video quality, in order to achieve the best viewing experience: playing the highest sustainable video quality while avoiding stalls entirely.
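A minimal sketch of this selection logic could look like the following. It is only one possible approach, not how any particular player works: the quality ladder, the 0.8 safety factor, and the exponential smoothing of the bandwidth samples are all assumptions I picked for the example.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    name: str
    bitrate_bps: int  # average bitrate declared in the manifest
    height: int       # vertical resolution in pixels


def measure_throughput_bps(bytes_downloaded, seconds):
    """Bandwidth sample from one segment download."""
    return bytes_downloaded * 8 / seconds


def smooth_estimate(previous_bps, sample_bps, alpha=0.3):
    """Exponentially weighted average, so one odd sample does not cause a switch."""
    if previous_bps is None:
        return sample_bps
    return alpha * sample_bps + (1 - alpha) * previous_bps


def select_rendition(renditions, estimated_bps, display_height=None, safety=0.8):
    """Highest rendition that fits the bandwidth budget and the display."""
    ladder = sorted(renditions, key=lambda r: r.bitrate_bps)
    budget = estimated_bps * safety  # keep headroom to avoid stalls
    best = ladder[0]  # fall back to the lowest quality
    for rendition in ladder:
        if rendition.bitrate_bps > budget:
            break
        if display_height is not None and rendition.height > display_height:
            continue
        best = rendition
    return best


# Hypothetical quality ladder.
ladder = [
    Rendition("360p", 800_000, 360),
    Rendition("720p", 2_500_000, 720),
    Rendition("1080p", 5_000_000, 1080),
]

# A 2,500,000-byte segment downloaded in 4 seconds.
sample = measure_throughput_bps(2_500_000, 4.0)
estimate = smooth_estimate(None, sample)
print(select_rendition(ladder, estimate).name)
```

In this example the measured bandwidth is 5 Mbps, but the safety factor leaves a budget of about 4 Mbps, so the player picks 720p rather than 1080p, which it could not sustain without risking a stall.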

To be continued…

In the next post I will list some of the issues I encountered in different adaptive video player implementations, and give my own guidelines and recommendations for the best video experience.